Understanding the Five Essential Components of Kubernetes


Introduction to Kubernetes

Before diving into Kubernetes (K8s), it's important to grasp its fundamental components and concepts. This guide outlines five essential components you should be familiar with before launching your first cluster: Nodes, Pods, Clusters, Namespaces, and Services.

What is Kubernetes?

To start, let's clarify what Kubernetes is. It is an open-source platform for managing and orchestrating containerized workloads. The name comes from the Greek word for helmsman or pilot, which aptly reflects its role in steering complex microservices applications made up of many containers. As an application grows, managing all of these containers by hand becomes overwhelming, and Kubernetes relieves that burden with a systematic approach to container management. Containers are still packaged as images (often built with Docker) and executed by a container runtime, but Kubernetes automates the surrounding work: scheduling containers onto machines, managing ports and connections between services, and restarting failed containers so that hundreds of services keep running.

Nodes Explained

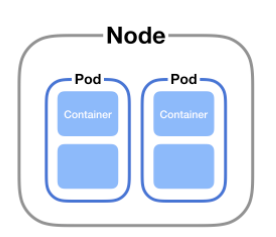

A Node is a physical or virtual machine that runs the Kubernetes node components. Each Node is managed by the control plane, which schedules Pods onto Nodes and orchestrates the lifecycle of their containers. The Node itself runs the services needed to execute those Pods: the kubelet, a container runtime, and kube-proxy.

Pods Demystified

A Pod is a group of one or more containers that share storage and network resources, along with a specification for how to run those containers. Pods are scheduled onto Nodes, and a single Node can run many Pods, which makes the Node the higher-level construct in the Kubernetes architecture.

When multiple containers run in the same Pod, they can communicate with each other via localhost, because they share the Pod's context: a set of Linux namespaces, cgroups, and other isolation mechanisms. In Docker terms, a Pod resembles a group of containers that share namespaces and filesystem volumes.
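
To make the shared context concrete, here is a minimal sketch of a two-container Pod, written as a Python dict that mirrors the fields of a Pod manifest. The Pod name, container names, the `shared-data` volume, and the commands are hypothetical; the point is that both containers run in the same Pod and mount the same volume.

```python
import json

# Hypothetical two-container Pod written as a Python dict that mirrors the
# YAML manifest. Both containers share the Pod's network namespace (so they
# could reach each other on localhost) and mount the same emptyDir volume.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "writer-reader"},
    "spec": {
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "writer",
                "image": "busybox:1.36",
                # Appends a timestamp to a file on the shared volume every 5 seconds.
                "command": ["sh", "-c", "while true; do date >> /data/out.log; sleep 5; done"],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
            {
                "name": "reader",
                "image": "busybox:1.36",
                # Follows the same file through its own mount of the volume.
                "command": ["sh", "-c", "tail -F /shared/out.log"],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/shared"}],
            },
        ],
    },
}


def containers_mounting(pod_manifest, volume_name):
    """Return the names of the containers that mount the given Pod volume."""
    return [
        c["name"]
        for c in pod_manifest["spec"]["containers"]
        if any(m["name"] == volume_name for m in c.get("volumeMounts", []))
    ]


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, so this output could be saved and applied.
    print(json.dumps(pod, indent=2))
    print(containers_mounting(pod, "shared-data"))  # ['writer', 'reader']
```

The two containers mount the volume at different paths, which shows that the volume belongs to the Pod rather than to either container.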

Understanding Clusters

A cluster is a collection of Nodes working together to host your containerized workloads. In an AWS environment, for example, this might be a group of EC2 instances; in a bare-metal setup, it might be a cluster of Raspberry Pis. Managing a cluster involves making sure that the Nodes stay live and ready to host applications. I recently built a Kubernetes cluster from a fleet of Raspberry Pis, and setting up the local network and configuring Kubernetes correctly presented several challenges. Fortunately, a cloud provider's managed Kubernetes service usually handles much of this complexity for you.
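
Checking that Nodes are ready can be scripted against the JSON that `kubectl get nodes -o json` returns. The sketch below assumes a trimmed-down, hypothetical version of that output (the node names `pi-1` to `pi-3` are made up) and only looks at each Node's `Ready` condition.

```python
import json

# Trimmed-down, hypothetical example of `kubectl get nodes -o json` output.
# Real Node objects carry many more fields; only the ones used here are kept.
NODES_JSON = """
{
  "items": [
    {"metadata": {"name": "pi-1"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
    {"metadata": {"name": "pi-2"},
     "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
    {"metadata": {"name": "pi-3"},
     "status": {"conditions": [{"type": "MemoryPressure", "status": "False"},
                               {"type": "Ready", "status": "True"}]}}
  ]
}
"""


def ready_nodes(node_list):
    """Return the names of Nodes whose Ready condition has status "True"."""
    ready = []
    for node in node_list["items"]:
        conditions = node.get("status", {}).get("conditions", [])
        if any(c["type"] == "Ready" and c["status"] == "True" for c in conditions):
            ready.append(node["metadata"]["name"])
    return ready


if __name__ == "__main__":
    print(ready_nodes(json.loads(NODES_JSON)))  # ['pi-1', 'pi-3']
```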

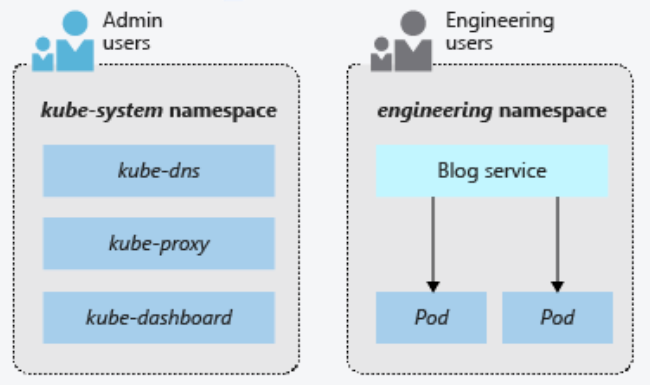

Namespaces Unpacked

Where a Cluster groups Nodes, a Namespace groups resources. A Namespace acts as a virtual cluster inside your Kubernetes cluster, letting you divide cluster resources among different applications or users. You can create as many custom namespaces as your applications need, or simply use the default namespace. Namespaces cannot be nested, and each namespaced resource (such as a Pod or a Service) belongs to exactly one namespace; cluster-wide resources such as Nodes do not belong to any namespace.
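
As a rough illustration of namespace scoping, the sketch below defines a Namespace manifest as a Python dict and groups a few hypothetical resources by namespace. The set of cluster-scoped kinds is a small illustrative subset, and the fallback to `default` is an assumption about how the objects would be created.

```python
from collections import defaultdict

# Illustrative subset of cluster-scoped kinds (not an exhaustive list).
CLUSTER_SCOPED_KINDS = {"Namespace", "Node", "PersistentVolume"}

# A Namespace manifest, written as a Python dict mirroring the YAML.
app_namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "app"}}

# Hypothetical resources; only the fields used below are included.
resources = [
    app_namespace,
    {"kind": "Pod", "metadata": {"name": "app-1", "namespace": "app"}},
    {"kind": "Service", "metadata": {"name": "app-1-service", "namespace": "app"}},
    # No namespace given: assumed to land in "default", as it would with kubectl
    # when neither the manifest nor the current context specifies one.
    {"kind": "Pod", "metadata": {"name": "scratch"}},
    {"kind": "Node", "metadata": {"name": "pi-1"}},
]


def group_by_namespace(objects):
    """Map each namespace to the namespaced objects it contains."""
    groups = defaultdict(list)
    for obj in objects:
        if obj["kind"] in CLUSTER_SCOPED_KINDS:
            continue  # cluster-scoped objects belong to no namespace
        ns = obj["metadata"].get("namespace", "default")
        groups[ns].append(f'{obj["kind"]}/{obj["metadata"]["name"]}')
    return dict(groups)


print(group_by_namespace(resources))
# {'app': ['Pod/app-1', 'Service/app-1-service'], 'default': ['Pod/scratch']}
```

Each namespaced object appears under exactly one key, while the Namespace and Node objects appear under none.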

Services in Kubernetes

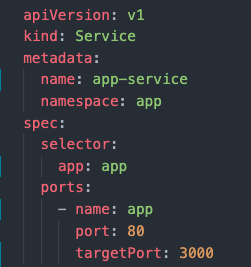

A Service is a mechanism for exposing an application running on a set of Pods, and Services are fundamental to Kubernetes networking. Kubernetes automatically assigns an IP address to each Pod. For instance, if a Pod has a container listening on port 3000 and Kubernetes assigns the Pod the IP address 10.0.0.1, you can reach the container at 10.0.0.1:3000. However, that IP address may change whenever the Pod is recreated. Services solve this by giving a set of Pods a stable IP address and DNS name that do not change, and by load-balancing traffic across those Pods.
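
The following is a simplified model (not how Kubernetes is implemented) of why a Service stays stable while Pod IPs change: the Service finds its Pods through a label selector, and its list of backend addresses is recomputed from whichever Pods currently match. In a real cluster, the EndpointSlice controller does this bookkeeping. The Pod names, labels, and the second IP address for app-1 are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)


def select_endpoints(selector, pods, port):
    """Return "ip:port" for every Pod whose labels contain all selector pairs
    (equality-based matching, as a Service selector does)."""
    return [
        f"{p.ip}:{port}"
        for p in pods
        if all(p.labels.get(k) == v for k, v in selector.items())
    ]


selector = {"app": "app-1"}
pods = [
    Pod("app-1-abc", "10.0.0.1", {"app": "app-1"}),
    Pod("app-2-xyz", "10.0.0.2", {"app": "app-2"}),
]
print(select_endpoints(selector, pods, 3000))  # ['10.0.0.1:3000']

# The app-1 Pod is recreated and receives a new (hypothetical) IP address.
pods[0] = Pod("app-1-def", "10.0.0.7", {"app": "app-1"})
print(select_endpoints(selector, pods, 3000))  # ['10.0.0.7:3000']
```

Clients keep using the Service's name; only the backend list behind it changes.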

Inside the cluster, a Service can be reached over HTTP at a URL built from its DNS name, which follows this pattern (cluster.local is the default cluster domain):

http://<Service Name>.<Namespace>.svc.cluster.local
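
A small helper can build this URL from its parts. This is a sketch; the function name `service_url` is hypothetical, and the `cluster_domain` parameter exists because clusters can be configured with a domain other than `cluster.local`.

```python
def service_url(service, namespace, port=None, scheme="http", cluster_domain="cluster.local"):
    """Build the URL of a Service from its DNS name.

    cluster.local is the default cluster domain; some clusters use another one.
    The port is left out when it is None, so the scheme's default port applies.
    """
    host = f"{service}.{namespace}.svc.{cluster_domain}"
    suffix = f":{port}" if port is not None else ""
    return f"{scheme}://{host}{suffix}"


print(service_url("app-1-service", "app"))
# http://app-1-service.app.svc.cluster.local
```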

You might wonder why this URL does not specify a port. Below is a code snippet illustrating a Service:
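
A minimal sketch of such a Service, written as a Python dict that mirrors the YAML manifest. The name `app-1-service` and namespace `app` follow the example used later in this article; the selector label `app: app-1` is an assumption.

```python
import json

# Service manifest as a Python dict mirroring the YAML.
# The selector label is an assumption for illustration.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "app-1-service", "namespace": "app"},
    "spec": {
        "selector": {"app": "app-1"},  # Pods carrying this label receive traffic
        "ports": [
            {
                "protocol": "TCP",
                "port": 80,          # port the Service listens on inside the cluster
                "targetPort": 3000,  # port on the selected Pods that traffic is forwarded to
            }
        ],
    },
}

print(json.dumps(service, indent=2))
```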

The Service in this example defines a port mapping with two fields: "port", set to 80, is the port the Service itself listens on inside the cluster, and "targetPort", set to 3000, is the port on the Pods to which the Service forwards traffic. Because the Service listens on port 80, the default port for HTTP, the URL above does not need to include a port.
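
The reasoning about the missing port can be checked with Python's standard `urllib.parse` module: a URL without an explicit port falls back to its scheme's default, which is 80 for HTTP. The `SERVICE_PORTS` mapping is a hypothetical stand-in for the Service's port 80 to targetPort 3000 rule.

```python
from urllib.parse import urlsplit

# Default ports for the schemes this sketch handles.
DEFAULT_PORTS = {"http": 80, "https": 443}

# Hypothetical stand-in for the Service's rule: Service port 80 -> targetPort 3000.
SERVICE_PORTS = {80: 3000}


def effective_port(url):
    """Port a client connects to: the explicit port if present, else the scheme default."""
    parts = urlsplit(url)
    return parts.port if parts.port is not None else DEFAULT_PORTS[parts.scheme]


url = "http://app-1-service.app.svc.cluster.local"
client_port = effective_port(url)
print(client_port)                 # 80, because HTTP defaults to port 80
print(SERVICE_PORTS[client_port])  # 3000, the port the request reaches on the Pod
```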

Here's a code snippet for a Pod that receives traffic from the Service above:
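
A minimal sketch of a matching Pod, again as a Python dict mirroring the YAML manifest. The image name is hypothetical, and the label `app: app-1` is an assumption; a Service only sends traffic to Pods whose labels match its selector.

```python
import json

# Pod manifest as a Python dict mirroring the YAML.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {
        "name": "app-1",
        "namespace": "app",
        "labels": {"app": "app-1"},  # assumed label; must match the Service selector
    },
    "spec": {
        "containers": [
            {
                "name": "app-1",
                "image": "example/app-1:latest",  # hypothetical image
                # The process in the container is assumed to listen on port 3000.
                "ports": [{"containerPort": 3000}],
            }
        ]
    },
}

print(json.dumps(pod, indent=2))
```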

In this snippet, the containerPort is set to 3000, matching the targetPort defined in the Service. This match is what lets traffic reach the application: the Service accepts requests on port 80 inside the cluster and forwards them to port 3000 on each Pod it selects, so the application in the container must actually listen on port 3000. Because clients only ever talk to the Service's port 80, the Service can spread their requests across multiple Pods.
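
Because this alignment is easy to get wrong, a lint-style check can compare the two manifests. This is a hypothetical helper, not a Kubernetes feature: Kubernetes itself does not reject a mismatch, since containerPort is largely informational, and this sketch ignores named (string) targetPorts.

```python
def unmatched_target_ports(service, pod):
    """Return numeric targetPorts of the Service that the Pod does not declare
    as a containerPort. Named (string) targetPorts are skipped in this sketch."""
    declared = {
        port["containerPort"]
        for container in pod["spec"]["containers"]
        for port in container.get("ports", [])
    }
    unmatched = []
    for entry in service["spec"]["ports"]:
        target = entry.get("targetPort", entry["port"])  # targetPort defaults to port
        if isinstance(target, int) and target not in declared:
            unmatched.append(target)
    return unmatched


# Minimal hypothetical manifests containing only the fields the check reads.
service = {"spec": {"ports": [{"port": 80, "targetPort": 3000}]}}
good_pod = {"spec": {"containers": [{"name": "app-1", "ports": [{"containerPort": 3000}]}]}}
bad_pod = {"spec": {"containers": [{"name": "app-1", "ports": [{"containerPort": 8080}]}]}}

print(unmatched_target_ports(service, good_pod))  # []
print(unmatched_target_ports(service, bad_pod))   # [3000]
```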

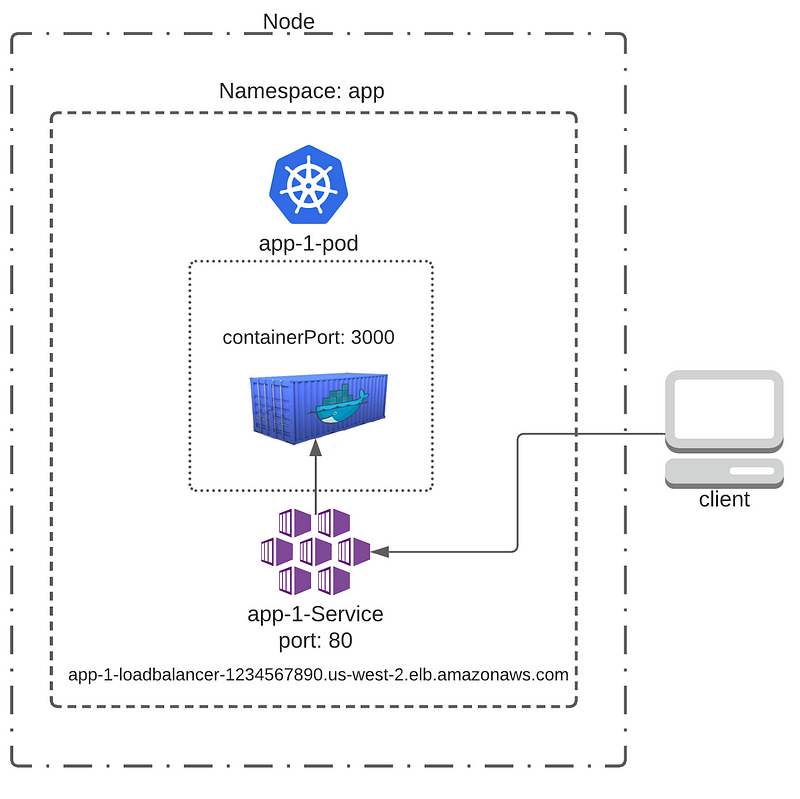

An example of incoming traffic to the Cluster from an external user to a container running on port 3000 is illustrated below:

When a user accesses the endpoint "app-1-loadbalancer-1234567890.us-west-2.elb.amazonaws.com," the request goes to the cloud load balancer, which forwards it to the Service, then to a Pod, and finally to the container on port 3000.
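
One common way to expose a Service to external users like this is a Service of type `LoadBalancer`, which asks the cloud provider to provision a load balancer and then records its address in the Service's status. The sketch below assumes the Service name `app-1-loadbalancer` and the `app: app-1` selector, and hardcodes the status that the cloud provider integration would normally fill in.

```python
# Hypothetical LoadBalancer Service for app-1, as a Python dict mirroring the YAML.
lb_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "app-1-loadbalancer", "namespace": "app"},
    "spec": {
        "type": "LoadBalancer",        # asks the cloud provider for an external load balancer
        "selector": {"app": "app-1"},  # assumed Pod label
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 3000}],
    },
    # Normally filled in once the load balancer exists; the hostname is the
    # example endpoint from this article.
    "status": {
        "loadBalancer": {
            "ingress": [{"hostname": "app-1-loadbalancer-1234567890.us-west-2.elb.amazonaws.com"}]
        }
    },
}


def external_endpoint(svc):
    """Return the load balancer hostname (or IP), or None if not provisioned yet."""
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    if not ingress:
        return None
    return ingress[0].get("hostname") or ingress[0].get("ip")


print(external_endpoint(lb_service))
```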

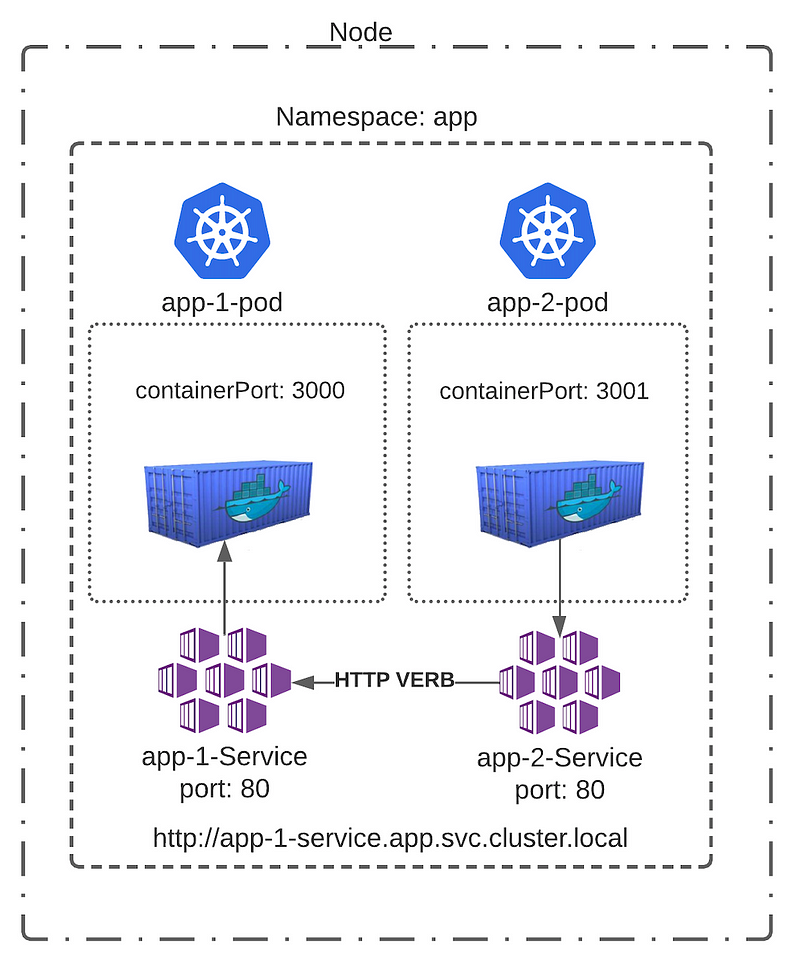

Additionally, here’s how Pod-to-Pod communication occurs through a Service:

This diagram summarizes the five essential components of Kubernetes. It shows a Cluster containing one Node with two Pods in the "app" Namespace. Each Pod has a single container, one listening on port 3000 and the other on port 3001, and there are two Services listening on port 80. Here, "app-2" sends an HTTP request to "app-1" through the Service at "http://app-1-service.app.svc.cluster.local," and that Service forwards the traffic to "app-1" on port 3000.
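
The request path in this diagram can be simulated in a few lines. This is a toy model under stated assumptions: it collapses the DNS lookup (Service name to ClusterIP) and kube-proxy's forwarding (ClusterIP to a Pod) into one function, and the Pod IPs and the `app-2-service` entry are hypothetical.

```python
import random

# Hypothetical in-memory model of the example cluster: namespace "app",
# two Services on port 80, each forwarding to one Pod.
SERVICES = {
    ("app-1-service", "app"): {"port": 80, "targetPort": 3000, "selector": {"app": "app-1"}},
    ("app-2-service", "app"): {"port": 80, "targetPort": 3001, "selector": {"app": "app-2"}},
}
PODS = [
    {"name": "app-1", "namespace": "app", "ip": "10.0.0.1", "labels": {"app": "app-1"}},
    {"name": "app-2", "namespace": "app", "ip": "10.0.0.2", "labels": {"app": "app-2"}},
]


def route(host, port=80):
    """Resolve <service>.<namespace>.svc.cluster.local:port to a Pod "ip:targetPort"."""
    parts = host.split(".")
    if parts[2:] != ["svc", "cluster", "local"]:
        raise ValueError(f"not an in-cluster Service name: {host}")
    svc = SERVICES[(parts[0], parts[1])]
    if port != svc["port"]:
        raise ValueError(f"Service does not listen on port {port}")
    backends = [
        p for p in PODS
        if p["namespace"] == parts[1]
        and all(p["labels"].get(k) == v for k, v in svc["selector"].items())
    ]
    pod = random.choice(backends)  # stand-in for kube-proxy picking a backend
    return f'{pod["ip"]}:{svc["targetPort"]}'


# app-2 calling app-1 through its Service:
print(route("app-1-service.app.svc.cluster.local"))  # 10.0.0.1:3000
```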

Summary

Kubernetes is a complex system that requires time and effort to understand fully.
